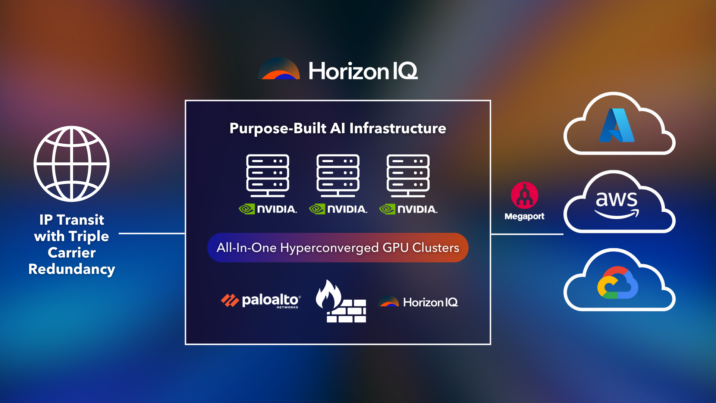

High performance AI infrastructure doesn’t have to be expensive

Do you have mature AI applications that are ready for your customers? HorizonIQ offers small to medium-sized NVIDIA GPU clusters that provide a foundation for deploying these applications in a single-tenant environment at 50% of the cost of major public cloud providers.

HorizonIQ can deploy clusters as small as three nodes with a total of three GPUs for lightweight AI deployments, and can scale up to hundreds of GPUs for larger AI applications.

Find the best GPU for your workload

Choose your path

NVIDIA H100

High-performance accelerator designed for AI, HPC, and data analytics workloads. Built on the Hopper architecture, it delivers exceptional throughput for training large language models, accelerating inference, and powering complex simulations.

$1,500* /month

Supported Use Cases

- Large language model (LLM) training

- AI inference at scale

- High-performance computing (HPC)

- Data analytics and graph processing

NVIDIA A100

High-performance compute for AI, HPC, and analytics. Delivers up to 20X the performance of the prior generation, making it well suited for model training, simulations, and big data.

$640* /month

Supported Use Cases

- AI training and inference

- Deep learning training and inference

- High-performance data analytics

- Scientific simulations

- Medical imaging processing

NVIDIA L40S

Versatile GPU for generative AI, Small Language Models (SLMs), and 3D workloads. Combines powerful compute with advanced graphics and media acceleration in a single platform.

$500* /month

Supported Use Cases

- Generative AI

- Large language model (LLM) inference

- Small-model training

- 3D graphics and rendering

- Video encoding and decoding

*The prices listed above are for the GPU hardware only. A fully configured system, including servers, storage, and networking, is required to run these GPUs. Contact us for complete system pricing and tailored solutions.

The right GPU for every need

Build the most powerful AI and graphics applications

Single-Tenant GPU Servers

Your GPU is 100% dedicated: no shared resources, no noisy neighbors, and no performance limits. Experience true single-tenant GPU hosting for consistent, high-performance workloads.

Full GPU Access and Control

Gain unrestricted access to all GPU resources. Build, train, and deploy your AI, HPC, or 3D applications on infrastructure you fully control.

Predictable Pricing

Avoid large capital expenditures and unpredictable billing. For steady, resource-intensive workloads, our flat monthly pricing is more cost-effective than usage-based cloud GPU pricing.

High-Density Deployments Available

Deploy up to two GPUs per server for maximum density and performance, ideal for scaling AI training, rendering, or scientific computing environments.

Modular Infrastructure Compatibility

Pair your GPU server with HorizonIQ firewalls, load balancers, and centralized storage for a complete, scalable infrastructure solution, all managed under one roof.

Built-In Security and Compliance

Train sensitive AI models with peace of mind. Our private, single-tenant environment enhances data security and helps ensure compliance with standards like HIPAA, SOC 2, and PCI DSS.

See how NVIDIA GPUs power innovation across industries

Accelerate Your Next-Gen Technologies

Finance and Fintech

Risk Modeling

Model complex financial scenarios with precision and speed using the NVIDIA H100. Its massive memory bandwidth and Transformer Engine make it ideal for Monte Carlo simulations, portfolio optimization, and HPC workloads in finance.

Algorithmic Trading

Drive low-latency trading strategies with the NVIDIA H100, which offers breakthrough performance per watt and the real-time compute capabilities critical for high-frequency financial environments.

Fraud Detection

Detect fraudulent behavior in real time using NVIDIA L40S GPUs. Optimized for inference and AI workflows, the L40S enables scalable, AI-powered analysis across massive transaction datasets.

Healthcare and Life Sciences

AI-Powered Diagnostics

Leverage the power of NVIDIA H100 to run deep learning models on diagnostic images, speeding up radiology workflows with more accurate results and reduced model training time.

Drug Discovery

Accelerate molecular simulation and AI-driven analysis of compound libraries with NVIDIA A100, purpose-built for double-precision computing and high-throughput workloads common in life sciences.

Medical Imaging Processing

Enable real-time processing of high-resolution imaging data at scale using NVIDIA L40S, which balances AI inference power and rendering capabilities for clinical environments.

Research and Development

Scientific Simulations

Run physics, chemistry, and climate models at unprecedented scale with the NVIDIA H100. Its next-generation Tensor Cores and memory architecture accelerate floating-point simulations for cutting-edge research.

Big Data Analytics

Extract deeper insights from massive datasets using NVIDIA L40S, combining high memory bandwidth with AI-focused architecture to support parallel data processing and visualization.

Machine Learning Research

Shorten AI development cycles by training large language models and deep neural networks on the NVIDIA A100, a proven choice for advanced ML workloads including NLP, computer vision, and reinforcement learning.

Empowering your success

The HorizonIQ difference

Cost Efficiency

Achieve up to 70% cost savings with our proprietary tools, optimized pricing models, and cost-efficient private cloud, bare metal, and GPU server solutions.

100% Uptime SLA

Your operations demand reliability. Our industry-leading 100% uptime SLA provides uninterrupted performance, backed by redundant systems and proactive monitoring.

Hardware Customization

Choose from 50 different CPU options, including Intel Xeon, Xeon Gold, and AMD EPYC servers. Configure virtually limitless RAM and storage options to match your AI, HPC, database, and cloud-native workloads.

Global Reach

Deploy your infrastructure where it matters most. With nine strategically located regions, we ensure low latency, high availability, and seamless scalability for your business.

Secure and Compliant

Our single-tenant infrastructure eliminates the security risks of shared environments, ensuring enterprise-grade compliance for industries requiring HIPAA, SOC 2, ISO 27001, and PCI DSS standards.

Proactive Management

Our Compass portal provides real-time visibility, cost control, and automated infrastructure management, giving you full control over your IT environment.

Dive deeper

Explore FAQs

We currently offer three types of NVIDIA GPUs. The L40S is the all-around workhorse, suited to a variety of use cases including AI inference and training, 3D graphics and rendering, and video encoding/decoding. The A16 powers virtual desktop environments cost-efficiently. The A100 is focused on demanding AI, high-performance computing (HPC), and analytics use cases. Please contact our sales team for help finding the best GPU for your business.

While CPUs can handle many AI inference use cases, GPUs are effectively required for AI training at any meaningful scale. GPUs are also much more efficient for graphics and video use cases.

We currently support Windows, Ubuntu, Debian, AlmaLinux, Red Hat, Proxmox, and VMware ESXi.

Yes, you can select the geographic regions that best meet your performance, regulatory, and latency requirements for hosting GPU servers. The A16, A100, and L40S GPUs are available at all our London, Amsterdam, Chicago, New Jersey, Seattle, Dallas, Phoenix, and Silicon Valley data center locations.

HorizonIQ offers either a 1Gbps or 10Gbps uplink with 10TB of outbound bandwidth included with each server. All inbound transfers are 100% free. Additional outbound bandwidth can be purchased, and outbound usage exceeding the contracted amount is billed based on actual usage.

Yes, any of our Bare Metal with GPU configurations can be customized. Please contact us to learn more.

We currently support a maximum of two GPUs per server. However, we are working to expand that number in the future.

We offer SATA, SSD, and NVMe drives with capacities up to 12TB per drive. Please contact us to learn more.

Yes, for an additional charge, we can work with you to manage your Bare Metal with GPU infrastructure.

Absolutely. With our Spend Portability program, you have the flexibility to change your infrastructure as your business needs change.

Contact us to learn more

Whether it’s machine learning, natural language processing, robotics, or something else, we have a GPU-powered server for your AI and HPC applications.